MobileNet v2 Using PyTorch | Deep Learning Assignment Help

- realcode4you

- Jun 1, 2021


Project Requirements:

The details are as follows:

1. Download a subset of the Simple Concept DataBase (SCDB) dataset.
2. Train a MobileNet v2 on the dataset using PyTorch and report your best performance.
3. Take 5 samples from the test set and compute GradCAM, ScoreCAM and Occlusion Sensitivity maps for each.
4. Randomize the trained network’s weights up to and including the fourth bottleneck layer (c=64) and compute saliency maps for the same 5 samples again.
5. Briefly present your results in a short report.
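Steps 3 and 4 rely on saliency maps. The following is a minimal plain-PyTorch sketch of two of the three requested methods, Grad-CAM and occlusion sensitivity (Score-CAM is not covered). It is not taken from the original solution: the function names, the default patch size of 32 with stride 16, and the use of `model_ft.features[-1]` as the Grad-CAM target layer for MobileNetV2 are all assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def grad_cam(model, target_layer, image, class_idx=None):
    """Grad-CAM heatmap in [0, 1] for one normalized image of shape (3, H, W)."""
    store = {}

    def forward_hook(module, inputs, output):
        store["acts"] = output
        # Capture the gradient of the class score w.r.t. this layer's output
        output.register_hook(lambda grad: store.__setitem__("grads", grad))

    handle = target_layer.register_forward_hook(forward_hook)
    try:
        model.eval()
        # Making the input require grad keeps the hooked activation in the graph,
        # even if the backbone parameters are frozen
        x = image.detach().unsqueeze(0).clone().requires_grad_(True)
        logits = model(x)
        if class_idx is None:
            class_idx = int(logits.argmax(dim=1))
        model.zero_grad()
        logits[0, class_idx].backward()
    finally:
        handle.remove()

    acts = store["acts"].detach()[0]                        # (C, h, w)
    weights = store["grads"].detach()[0].mean(dim=(1, 2))   # averaged gradients -> (C,)
    cam = F.relu((weights[:, None, None] * acts).sum(dim=0))
    # Upsample the coarse map to the input resolution
    cam = F.interpolate(cam[None, None], size=tuple(image.shape[1:]),
                        mode="bilinear", align_corners=False)[0, 0]
    return cam / (cam.max() + 1e-8)


@torch.no_grad()
def occlusion_sensitivity(model, image, class_idx, patch=32, stride=16, fill=0.0):
    """Average drop in class probability when each patch is replaced by `fill`."""
    model.eval()
    _, H, W = image.shape
    base = F.softmax(model(image.unsqueeze(0)), dim=1)[0, class_idx].item()
    heat = torch.zeros(H, W)
    counts = torch.zeros(H, W)
    for y in range(0, H - patch + 1, stride):
        for x in range(0, W - patch + 1, stride):
            occluded = image.clone()
            # 0.0 in normalized space corresponds to the per-channel dataset mean
            occluded[:, y:y + patch, x:x + patch] = fill
            prob = F.softmax(model(occluded.unsqueeze(0)), dim=1)[0, class_idx].item()
            heat[y:y + patch, x:x + patch] += base - prob
            counts[y:y + patch, x:x + patch] += 1
    return heat / counts.clamp(min=1)
```

For a normalized `(3, 224, 224)` test image `img` on the model's device, a call might look like `grad_cam(model_ft, model_ft.features[-1], img)`. Choose a target layer whose output is not modified in place afterwards; the last feature block is a reasonable choice because MobileNetV2 only pools its output before the classifier.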

Code Script

Load Dataset

import zipfile

# Extract the dataset archive into /tem
local_zip = '/content/data.zip'
with zipfile.ZipFile(local_zip, 'r') as zip_ref:
    zip_ref.extractall('/tem')

Import Libraries

# Import all the libraries we need

# PyTorch libraries
import torch
import torchvision as tv
from torchvision import datasets, transforms
from torch import optim
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import Subset

# Other necessary libraries
import numpy as np
import pandas as pd
import os
import matplotlib.pyplot as plt
import seaborn as sns
import shutil
from PIL import Image
from sklearn.metrics import f1_score, confusion_matrix
from sklearn.model_selection import train_test_split

# Check which device you're using
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)

data_dir = '/tem/data/TrainImages'

augmentations = [transforms.Resize((224, 224)),
                 transforms.RandomHorizontalFlip(),
                 transforms.ToTensor(),
                 transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                      std=[0.229, 0.224, 0.225])]

dataset = datasets.ImageFolder(data_dir, transform=transforms.Compose(augmentations))
print(len(dataset))

def train_val_dataset(dataset, val_split=0.10):
    # Randomly split the sample indices; both subsets share the same underlying dataset
    train_idx, val_idx = train_test_split(list(range(len(dataset))), test_size=val_split)
    my_dataset = {}
    my_dataset['train'] = Subset(dataset, train_idx)
    my_dataset['val'] = Subset(dataset, val_idx)
    return my_dataset

my_dataset = train_val_dataset(dataset)
print(len(my_dataset['train']))
print(len(my_dataset['val']))

# The original dataset is available in the Subset class
print(my_dataset['train'].dataset)

Output:

960
864
96
Dataset ImageFolder
    Number of datapoints: 960
    Root location: /tem/data/TrainImages
    StandardTransform
Transform: Compose(
               Resize(size=(224, 224), interpolation=bilinear)
               RandomHorizontalFlip(p=0.5)
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           )
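Because both `Subset`s wrap the same `ImageFolder`, validation images also pass through `RandomHorizontalFlip`. One way to give each split its own transform is to load the folder without a transform and wrap each index list separately. `TransformedSubset` is a hypothetical helper, not a torchvision class, and the stratified split in the usage comment is an optional assumption.

```python
from torch.utils.data import Dataset


class TransformedSubset(Dataset):
    """View of `base` restricted to `indices`, applying its own `transform`."""

    def __init__(self, base, indices, transform=None):
        self.base = base
        self.indices = list(indices)
        self.transform = transform

    def __len__(self):
        return len(self.indices)

    def __getitem__(self, i):
        sample, label = self.base[self.indices[i]]
        if self.transform is not None:
            sample = self.transform(sample)
        return sample, label


# Usage sketch with the names from the code above:
# raw = datasets.ImageFolder(data_dir)  # no transform: samples are PIL images
# train_idx, val_idx = train_test_split(list(range(len(raw))), test_size=0.10,
#                                       stratify=raw.targets)
# val_tf = transforms.Compose([t for t in augmentations
#                              if not isinstance(t, transforms.RandomHorizontalFlip)])
# my_dataset = {'train': TransformedSubset(raw, train_idx, transforms.Compose(augmentations)),
#               'val': TransformedSubset(raw, val_idx, val_tf)}
```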

BATCH_SIZE = 64

dataloaders = {x: torch.utils.data.DataLoader(my_dataset[x], batch_size=BATCH_SIZE,
                                              shuffle=True, num_workers=1)
               for x in ['train', 'val']}
classes = list(my_dataset['train'].dataset.class_to_idx.keys())
dataiter = iter(dataloaders['train'])
dataset_sizes = {x: len(my_dataset[x]) for x in ['train', 'val']}

# Use the built-in next(); newer PyTorch releases no longer provide dataiter.next()
images, labels = next(dataiter)
print(images.shape)
print(labels.shape)

Output:

torch.Size([64, 3, 224, 224])
torch.Size([64])

classes, dataset_sizes

Output:

(['0', '1'], {'train': 864, 'val': 96})

dataloaders

Output:

{'train': <torch.utils.data.dataloader.DataLoader at 0x7fe6e0051750>, 'val': <torch.utils.data.dataloader.DataLoader at 0x7fe6e0051cd0>}

# images holds one batch of 64 images from the train dataloader; inspect the 32nd one (index 31)
images[31], labels[31]

Output:

(tensor([[[-2.1179, -2.1179, -2.1179, ..., -2.1179, -2.1179, -2.1179], [-2.1179, -2.1179, -2.1179, ..., -2.1179, -2.1179, -2.1179], [-2.1179, -2.1179, -2.1179, ..., -2.1179, -2.1179, -2.1179], ..., [-2.1179, -2.1179, -2.1179, ..., -2.1179, -2.1179, -2.1179], [-2.1179, -2.1179, -2.1179, ..., -2.1179, -2.1179, -2.1179], [-2.1179, -2.1179, -2.1179, ..., -2.1179, -2.1179, -2.1179]], [[-2.0357, -2.0357, -2.0357, ..., -2.0357, -2.0357, -2.0357], [-2.0357, -2.0357, -2.0357, ..., -2.0357, -2.0357, -2.0357], [-2.0357, -2.0357, -2.0357, ..., -2.0357, -2.0357, -2.0357], ..., [-2.0357, -2.0357, -2.0357, ..., -2.0357, -2.0357, -2.0357], [-2.0357, -2.0357, -2.0357, ..., -2.0357, -2.0357, -2.0357], [-2.0357, -2.0357, -2.0357, ..., -2.0357, -2.0357, -2.0357]], [[-1.8044, -1.8044, -1.8044, ..., -1.8044, -1.8044, -1.8044], [-1.8044, -1.8044, -1.8044, ..., -1.8044, -1.8044, -1.8044], [-1.8044, -1.8044, -1.8044, ..., -1.8044, -1.8044, -1.8044], ..., [-1.8044, -1.8044, -1.8044, ..., -1.8044, -1.8044, -1.8044], [-1.8044, -1.8044, -1.8044, ..., -1.8044, -1.8044, -1.8044], [-1.8044, -1.8044, -1.8044, ..., -1.8044, -1.8044, -1.8044]]]), tensor(0))

tnsn_np = images[31].numpy()

plt.imshow(np.transpose(tnsn_np,(1,2,0)))Output:

def img_display(img):

img = img / 2 + 0.5 # unnormalize

npimg = img.numpy()

npimg = np.transpose(npimg, (1, 2, 0))

return npimg

classes = {0: '0', 1: '1'}

# Viewing data examples used for training

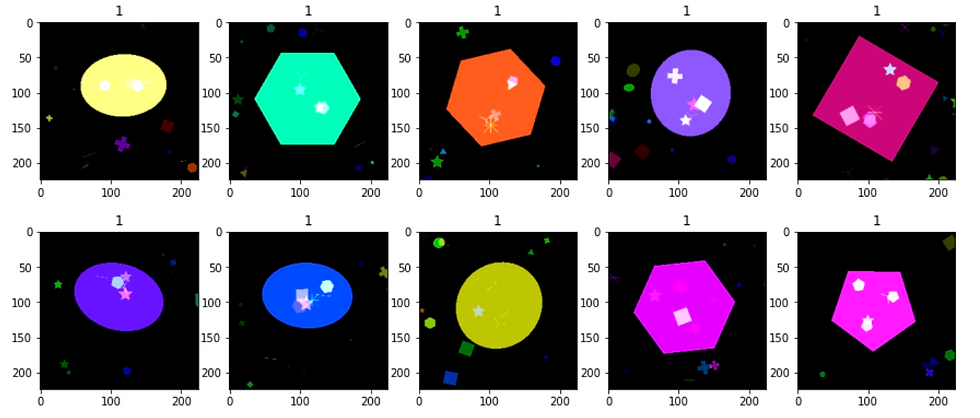

fig, axis = plt.subplots(3, 5, figsize=(15, 10))

for i, ax in enumerate(axis.flat):

with torch.no_grad():

image, label = images[i], labels[i]

ax.imshow(img_display(image))

ax.set(title ="{}".format(classes[label.item()]))Output:

Checking the image shape:

images.shape

Output:

torch.Size([64, 3, 224, 224])

Fit MobileNet V2 Model

import torch

model_ft = torch.hub.load('pytorch/vision:v0.9.0', 'mobilenet_v2', pretrained=True)
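The hub model still ends in ImageNet's 1000-way classifier (`classifier[1]` is `Linear(1280, 1000)` in torchvision's MobileNetV2), while this dataset has two classes, '0' and '1'. The post does not show the head being replaced; a minimal sketch, assuming the pretrained backbone is kept and a new 2-way head is trained. `replace_head` is a hypothetical helper name.

```python
import torch.nn as nn


def replace_head(model: nn.Module, num_classes: int) -> nn.Module:
    """Swap model.classifier[1] (the final Linear) for a num_classes-way layer."""
    in_features = model.classifier[1].in_features  # 1280 for MobileNetV2
    model.classifier[1] = nn.Linear(in_features, num_classes)
    return model


# Usage (assumes model_ft and device from the code above):
# model_ft = replace_head(model_ft, num_classes=2).to(device)
```

Only the new `Linear` layer starts from random weights; the dropout layer and everything in `features` keep their pretrained state.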


Check model:

model_ft

Output:

MobileNetV2( (features): Sequential( (0): ConvBNActivation( (0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False) (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=32, bias=False) (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): Conv2d(32, 16, kernel_size=(1, 1), stride=(1, 1), bias=False) (2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (2): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(16, 96, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(96, 96, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=96, bias=False) (1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(96, 24, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (3): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(144, 144, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=144, bias=False) (1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(144, 24, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (4): InvertedResidual( (conv): Sequential( (0): 
ConvBNActivation( (0): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(144, 144, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=144, bias=False) (1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(144, 32, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (5): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False) (1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (6): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False) (1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (7): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) 
(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(192, 192, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=192, bias=False) (1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (8): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (9): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (10): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 
(2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (11): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False) (1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(384, 96, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (12): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(96, 576, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(576, 576, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=576, bias=False) (1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(576, 96, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (13): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(96, 576, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(576, 576, 
kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=576, bias=False) (1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(576, 96, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (14): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(96, 576, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(576, 576, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=576, bias=False) (1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(576, 160, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (15): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(960, 960, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=960, bias=False) (1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (16): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(960, 960, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=960, bias=False) (1): 
BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (17): InvertedResidual( (conv): Sequential( (0): ConvBNActivation( (0): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (1): ConvBNActivation( (0): Conv2d(960, 960, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=960, bias=False) (1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) (2): Conv2d(960, 320, kernel_size=(1, 1), stride=(1, 1), bias=False) (3): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (18): ConvBNActivation( (0): Conv2d(320, 1280, kernel_size=(1, 1), stride=(1, 1), bias=False) (1): BatchNorm2d(1280, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU6(inplace=True) ) ) (classifier): Sequential( (0): Dropout(p=0.2, inplace=False) (1): Linear(in_features=1280, out_features=1000, bias=True) ) )
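Requirement 2 asks for training and reporting the best performance, but the training loop itself is not shown. A minimal sketch of one epoch of training or evaluation, assuming a 2-way head and the `dataloaders` and `device` defined earlier; the optimizer, learning rate, epoch count and checkpoint filename in the usage comment are illustrative assumptions, not the author's settings.

```python
import torch
import torch.nn as nn


def run_epoch(model, loader, criterion, device, optimizer=None):
    """One pass over `loader`: trains if `optimizer` is given, otherwise evaluates.

    Returns (mean loss, accuracy) over all samples seen.
    """
    training = optimizer is not None
    model.train(training)  # train(False) is equivalent to eval()
    total_loss, correct, seen = 0.0, 0, 0
    with torch.set_grad_enabled(training):
        for inputs, labels in loader:
            inputs, labels = inputs.to(device), labels.to(device)
            logits = model(inputs)
            loss = criterion(logits, labels)
            if training:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total_loss += loss.item() * inputs.size(0)
            correct += (logits.argmax(dim=1) == labels).sum().item()
            seen += inputs.size(0)
    return total_loss / seen, correct / seen


# Usage sketch (hypothetical hyperparameters):
# criterion = nn.CrossEntropyLoss()
# optimizer = torch.optim.Adam(model_ft.parameters(), lr=1e-4)
# best_acc = 0.0
# for epoch in range(10):
#     train_loss, train_acc = run_epoch(model_ft, dataloaders['train'], criterion, device, optimizer)
#     val_loss, val_acc = run_epoch(model_ft, dataloaders['val'], criterion, device)
#     if val_acc > best_acc:
#         best_acc = val_acc
#         torch.save(model_ft.state_dict(), 'best_mobilenet_v2.pt')
```

Keeping the checkpoint with the best validation accuracy gives a single number to report for step 2; `f1_score` from the imports could be computed the same way if accuracy alone is not enough.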

Check which parameters are trainable:

print("model_ft")
for i, param in enumerate(model_ft.named_parameters()):
    # param is a (name, tensor) pair
    print(i, param[0], param[1].requires_grad)
    # param[1].requires_grad = False  # uncomment to freeze this parameter

Output:

model_ft
0 features.0.0.weight True
1 features.0.1.weight True
2 features.0.1.bias True
3 features.1.conv.0.0.weight True
4 features.1.conv.0.1.weight True
5 features.1.conv.0.1.bias True
6 features.1.conv.1.weight True
7 features.1.conv.2.weight True
8 features.1.conv.2.bias True
9 features.2.conv.0.0.weight True
10 features.2.conv.0.1.weight True
11 features.2.conv.0.1.bias True
12 features.2.conv.1.0.weight True
13 features.2.conv.1.1.weight True
14 features.2.conv.1.1.bias True
15 features.2.conv.2.weight True
16 features.2.conv.3.weight True
17 features.2.conv.3.bias True
18 features.3.conv.0.0.weight True
19 features.3.conv.0.1.weight True
20 features.3.conv.0.1.bias True
21 features.3.conv.1.0.weight True
22 features.3.conv.1.1.weight True
23 features.3.conv.1.1.bias True
24 features.3.conv.2.weight True
25 features.3.conv.3.weight True
26 features.3.conv.3.bias True
27 features.4.conv.0.0.weight True
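The listing groups parameters by their `features.N` prefix, and two steps of the assignment act on those same blocks: freezing the backbone when only a new head is fine-tuned (what the commented-out `requires_grad = False` line hints at), and the randomization in step 4. In the model printout, the blocks that output 64 channels are `features[7]` through `features[10]`, so "up to and including the c=64 bottleneck" plausibly means `features[0]` through `features[10]`. That mapping is an interpretation, not something the post states. Both helper names are hypothetical.

```python
import torch
import torch.nn as nn


def freeze_backbone(model: nn.Module) -> None:
    """Stop gradient updates for every parameter under model.features."""
    for p in model.features.parameters():
        p.requires_grad = False


def randomize_up_to(features: nn.Sequential, last_idx: int, seed=None) -> None:
    """Re-initialize features[0] .. features[last_idx] (inclusive) in place.

    Conv2d/Linear weights are redrawn with PyTorch's default init; BatchNorm2d
    layers are reset to weight=1, bias=0 and fresh running statistics.
    Passing `seed` reseeds the global RNG for a reproducible draw.
    """
    if seed is not None:
        torch.manual_seed(seed)
    for block in list(features)[: last_idx + 1]:
        for module in block.modules():
            if hasattr(module, "reset_parameters"):
                module.reset_parameters()


# Usage sketch for step 4, keeping the trained model intact:
# import copy
# model_rand = copy.deepcopy(model_ft)
# randomize_up_to(model_rand.features, last_idx=10)  # features[7..10] form the c=64 stage
```

Note that `requires_grad = False` does not stop BatchNorm running statistics from updating: in `model.train()` mode, frozen BatchNorm layers still refresh their running mean and variance.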
